INFERENCE

ECONOMICS

Enterprises must balance maximum AI value against rising computational costs. Inference, the process of running data through a trained model, presents a distinct and recurring economic challenge.

Scaling Challenge

As models generate more tokens to solve complex problems, the infrastructure must scale efficiently to prevent costs from skyrocketing.

ECONOMICS OF AI

Understanding the shift from capital-intensive training to usage-based operational inference expenses.

Inference economics marks the shift from a one-time capital expense for model training to a recurring operational cost incurred with every model query. Unlike training, which might cost $100,000 once, inference at $0.01 per query scales to $10,000 monthly for a million queries.

Proof-of-concept costs are poor predictors of production expenses, with a typical scaling factor of 717x. Controlled pilot environments hide the realities of organic usage, traffic spikes, and error retry loops that drive real-world costs.

This discrepancy often leads projects into 'proof-of-concept purgatory,' where the business case evaporates once true production costs become clear.

METRICS

Defining key operational units for performance evaluation.

Evolving Laws

Pretraining

The foundational law: increased data, parameters, and compute yield predictable improvements. A one-time, capital-intensive investment.

Post-training

Fine-tuning for specific applications. Enhances accuracy through techniques like retrieval-augmented generation (RAG) for enterprise data.

Test-time Scaling

"Long thinking" or reasoning. Models allocate extra compute during inference to evaluate multiple outcomes. Generates more tokens for complex tasks.

Smarter AI requires generating more tokens, and a quality user experience demands generating them as fast as possible.

717X COST SPIRAL

Why PoC budgets fail to predict production expenses

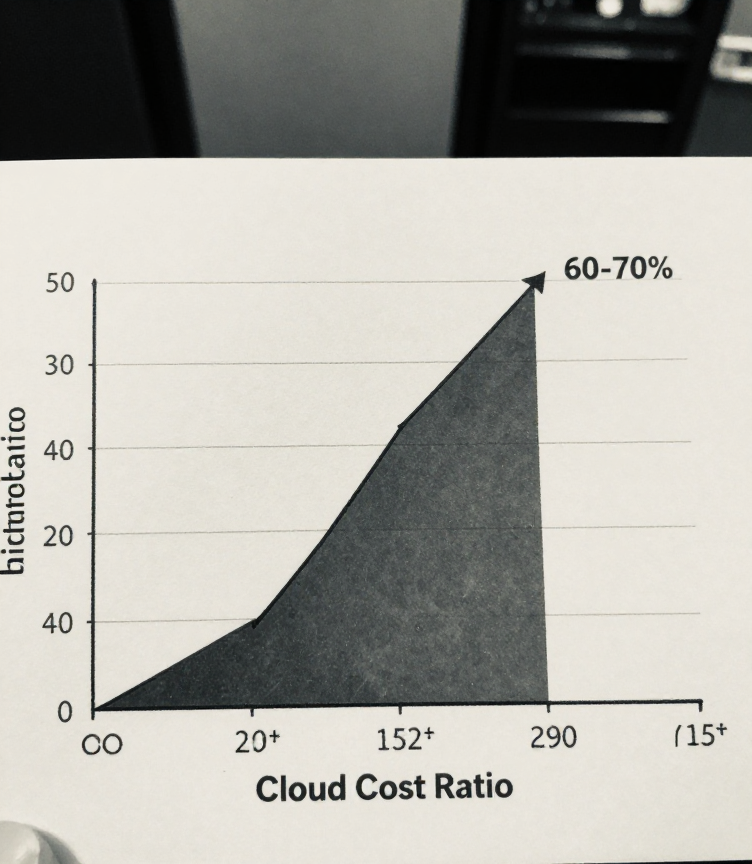

Volume Effect

Cloud costs hit a tipping point at 60-70% of equivalent on-premises systems. Past this, staying in the cloud becomes economically inefficient.

Scaling Factor

Organizations report AI costs increasing 5-10x within months as "feature creep" sets in. PoC tests one use case; production demands many more.

TIPPING POINT

Defining the Economic Inflection

Utilization Metric

Workloads hitting 60-70% of equivalent on-premises costs signal a tipping point. Predictive traffic patterns allow for accurate forecasting, making cloud elasticity premiums unnecessary.

Cost Efficiency

For 24/7 AI inference, this threshold is often met immediately. Roughly 25% of tech leaders shift workloads at 26-50% cost difference, highlighting financial imperatives.

INFERENCE COSTS

Identifying primary cost components

01 Compute & Model Size

70B+ models cost 10x more per token than 7B models. Size dictates base expense.

02 Architectural Complexity

Transformer mechanisms scale quadratically. Doubling context quadruples cost.

03 Latency & Concurrency

Supporting 100 simultaneous users requires 10x the infrastructure of 10 users.

04 Data Egress Fees

Often overlooked fees for data movement can be 15-30% of the total bill.

OPERATIONAL TACTICS

Strategic Combinations for Efficiency

1. Caching

"Free money." A 50% cache hit rate doubles infrastructure capacity. Saving $75k-$150k monthly for high-volume systems is achievable by avoiding redundant calls.

2. Quantization

Reducing precision (e.g., FP32 to INT4) cuts compute needs by 4-8x with minimal accuracy loss. Translates to a 60-80% cost reduction instantly.

3. Intelligent Routing

Align compute cost with query complexity. Routing 70% of simple queries to smaller models reserves expensive resources for the remaining 30%.

THREE-TIER ARCHITECTURE

Cloud (Flexibility)

New features, geographic expansion, and burst capacity needs are managed here.

On-Premises

Runs production inference for stable, high-volume, predictable workloads.

Edge (Sovereignty)

Handles ultra-low latency and data sovereignty requirements with local processing.

42% of organizations favor a balanced approach, optimizing different objectives with varied infrastructure types.

FINIS

Strategic Mastery of AI Economics

The Golden Rule

"Plan for recurring operational costs, not just one-time training. Sustainable AI requires a rigorous focus on inference efficiency."

Balance throughput and latency against unit costs.

Monitor cost-per-query to avoid non-linear scaling spirals.

Leverage hybrid infrastructure for optimal production scale.