Understanding the $500M Investment in AI Safety

The $500 million investment in AI safety is a crucial step toward mitigating risks associated with advanced AI technologies. This significant financial commitment underscores the growing recognition among stakeholders of the need for responsible AI development and deployment. The primary rationale is the concern over AI potentially causing unintended harm if not properly controlled, especially as it becomes integral to sectors like healthcare, finance, and national security. The funding will support research into frameworks and tools for managing AI risks to ensure systems benefit humanity.

Key areas of focus include enhancing AI robustness and reliability to prevent malfunctions and withstand adversarial attacks, improving transparency and explainability to foster trust, and addressing biases to uphold fairness and ethics in AI algorithms. The investment also aims to foster collaboration among academia, industry, and government, creating a multidisciplinary approach to AI safety. This initiative is intended to help AI technologies align with human values, emphasizing responsible innovation for a safer, AI-driven future.

The Purpose Behind the Funding

The $500 million allocated for AI safety aims to address challenges and risks from AI advancements by ensuring alignment with human values and ethics. This funding supports robust AI safety research to understand and mitigate risks in AI decision-making, ensuring AI behaves predictably and safely. It also promotes transparency and accountability by developing explainable AI technologies to address the “black box” nature of AI models, crucial in sectors like healthcare and finance. Furthermore, it supports creating regulatory frameworks and ethical guidelines for responsible AI development and use, emphasizing international cooperation for global standards. Additionally, the initiative seeks to enhance public awareness and education on AI safety, empowering individuals to engage in informed discussions about AI’s societal role. Overall, this investment aims to ensure AI technologies are developed in a safe, ethical, and beneficial manner for humanity, acknowledging AI’s transformative potential while emphasizing careful oversight and responsible innovation.

Key Players Involved in the Initiative

The $500 million initiative for AI safety involves key players from major tech companies, non-profits, academic institutions, government bodies, and philanthropic foundations. Technology companies like Google, Microsoft, and OpenAI lead this effort, leveraging their resources and expertise in AI development. Non-profits such as the Partnership on AI and the Future of Life Institute advocate for ethical AI practices and foster cross-sector collaboration. Academic institutions like Stanford, MIT, and Oxford contribute critical research and thought leadership, exploring AI’s societal impacts and developing safety frameworks. Government bodies, including the EU’s High-Level Expert Group on AI and the U.S. National Institute of Standards and Technology, develop guidelines and standards that inform regulation and support robust AI systems. Philanthropic foundations, notably the Open Philanthropy Project, provide crucial funding to support research and initiatives. Together, these diverse players aim to create a comprehensive approach to AI safety, focusing on human well-being and ethical considerations so that AI technologies benefit society.

The Importance of AI Safety

AI safety is crucial as AI technologies rapidly evolve and integrate into critical sectors like healthcare, transportation, finance, and national security. AI systems directly affect human lives when they diagnose diseases or make split-second decisions in autonomous vehicles, where errors could have severe consequences. The increasing autonomy and complexity of AI systems raise concerns about control and predictability, as they can develop unforeseen strategies. Additionally, AI can be weaponized or used maliciously, posing threats such as cyberattacks or AI-controlled autonomous weapons. Ethical concerns arise when AI perpetuates biases present in its training data, leading to unfair outcomes. Addressing these biases is vital to ensure AI technologies do not reinforce societal inequalities. To mitigate these risks, significant resources are being allocated to AI safety, including the development of governance frameworks, standards for AI development, and interdisciplinary research. Prioritizing AI safety is essential to maximize AI’s benefits while minimizing potential harms.

Potential Risks of Uncontrolled AI

The rapid advancement of AI technology presents significant risks if left unchecked or inadequately regulated. One major concern is AI systems acting unpredictably or autonomously, potentially misaligning with human goals and causing harm. For instance, an AI prioritizing efficiency over ethics might negatively impact humans or the environment. Additionally, the concentration of AI capabilities among large corporations or state entities can lead to societal imbalances, with a few entities wielding significant influence over economic and political systems. This concentration can exacerbate inequalities and limit smaller entities’ participation in AI governance. AI’s potential weaponization poses threats to national security and privacy, as it could be used for cyberattacks, surveillance, and misinformation. Moreover, AI-driven automation risks widespread job displacement, causing economic instability and unemployment. Finally, AI decision-making processes often lack transparency, which can conceal embedded biases and lead to unfair outcomes. Addressing these risks requires robust regulation and transparency to align AI with human values and interests.

Historical Incidents Highlighting AI Dangers

The history of artificial intelligence (AI) and automated decision systems has been marked by incidents highlighting their potential dangers, underscoring the need for responsible management. In 1983, a Soviet satellite early-warning system, an automated system rather than AI in the modern sense, falsely reported a U.S. missile launch after misinterpreting sunlight reflecting off clouds. Soviet officer Stanislav Petrov’s decision to treat the alert as a false alarm averted a possible nuclear escalation. In 2016, Microsoft’s AI chatbot, Tay, began posting offensive tweets within a day of launch after learning from users, demonstrating the risks of AI manipulation and the need for content moderation. In 2018, an Uber self-driving test car in Arizona failed to correctly identify a pedestrian in time, resulting in a fatality and revealing AI’s limitations in complex environments. Additionally, a 2016 ProPublica analysis of the COMPAS risk-assessment tool used in the U.S. justice system reported bias against African American defendants, raising ethical concerns about AI’s role in perpetuating social biases. These incidents highlight the need for rigorous safety measures and ethical guidelines in AI development and deployment.

Who is Really in Control of AI Development?

Control over AI development involves a complex interplay between technology companies, governments, academic institutions, and individual contributors. Large tech corporations like Google, Microsoft, and OpenAI dominate the AI landscape due to their resources, talent, and infrastructure, driving innovation and influencing public policy through lobbying (Source A). Governments also play a crucial role, with their influence varying by region. In China, government agencies are deeply involved, prioritizing national interests (Source B), while Western governments impact AI through regulations and funding (Source C). Academic institutions contribute by advancing research and producing AI experts, though their reliance on external funding can shape their priorities (Source D). Individual contributors, including open-source communities, offer significant breakthroughs and promote transparency, providing checks against corporate monopolization (Source E). Control in AI development is thus distributed among diverse stakeholders, each with unique agendas, in a dynamic landscape of collaboration and competition. Understanding this requires appreciating these complex relationships.

The Role of Tech Giants

Tech giants like Google, Microsoft, Amazon, and Apple are crucial in ensuring AI safety, leveraging their vast resources to lead in AI development and safety initiatives. They significantly invest in AI research, with Google focusing on aligning AI with human values and Microsoft’s AI for Good initiative addressing global challenges like climate change and healthcare. Collaborations with academic institutions further their understanding of AI risks, as seen in Amazon’s partnerships with universities. These companies also influence AI policy and regulation by engaging with governments and global forums, ensuring legislation reflects current technological insights. Apple, for instance, advocates for strong privacy and data security policies. However, their dominance raises concerns about control and accountability, as critics fear a focus on profit over societal well-being. To address this, some have established ethics boards, though their effectiveness is debated. Ultimately, tech giants are vital in advancing AI safety, balancing innovation with accountability to benefit society.

Government Regulations and Policies

Government regulations and policies are essential for the safe development and deployment of AI technologies, balancing innovation with safety and ethics. Key concerns include mitigating AI risks like biases, privacy violations, and job displacement while promoting transparency, accountability, and fairness. Frameworks ensuring AI systems are developed safely and beneficially are crucial, focusing on data privacy and ethical use. The European Union leads with the GDPR for data protection and is working on the Artificial Intelligence Act to regulate AI based on risk levels. In the U.S., NIST is developing guidelines for managing AI risks, alongside ongoing discussions of federal AI legislation. International cooperation is vital for cohesive regulations, with organizations like the OECD promoting AI principles that emphasize human rights and democratic values. Challenges include the rapid pace of AI development and regulatory fragmentation. Ongoing collaboration and adaptation are necessary to ensure regulations keep pace with technological advancements and protect public interests.

The Influence of Non-Profit Organizations

Non-profit organizations play a crucial role in ensuring the safety and ethical development of artificial intelligence (AI) by acting as neutral parties focused on long-term implications over commercial interests. They fund and conduct independent research prioritizing safety and ethics, creating a knowledge base that informs policymakers and the public. Organizations like the Partnership on AI foster interdisciplinary collaborations to explore AI’s societal impacts, while groups like the Electronic Frontier Foundation advocate for regulatory frameworks ensuring AI systems are developed responsibly. These non-profits push for policies requiring AI transparency and accountability, reducing the risk of unintended harm. Additionally, they educate the public through workshops and publications, demystifying AI technologies and fostering informed societal participation in AI discussions. As conveners of dialogue between academia, industry, and civil society, non-profits facilitate collaborative problem-solving and consensus-building on AI safety, ensuring AI technologies align with human values and societal well-being.

The Ethical Implications of AI Control

The ethical implications of AI control are complex, involving autonomy, accountability, and fairness. As AI systems become more integrated into society, control over these technologies is crucial, encompassing technical oversight and ethical stewardship. Bias and discrimination are significant concerns, as AI systems often reflect the data they are trained on, potentially perpetuating societal biases. Ensuring fairness in AI development is essential (Source 1). Transparency is another key issue, as complex algorithms like deep learning can operate as “black boxes,” making decisions difficult to interpret. This opacity challenges trust and accountability (Source 2). The delegation of decision-making to AI raises concerns about autonomy, risking diminished human oversight and agency (Source 3). Control of AI often lies with powerful entities, raising equity issues. Ensuring AI benefits are fairly distributed and diverse voices are included in governance is critical (Source 4). Robust ethical frameworks are needed to prevent misuse and align AI with human values (Source 5). Addressing these ethical challenges is essential for AI to be safe and beneficial.

Balancing Innovation and Safety

Balancing innovation and safety in AI development is crucial yet challenging. As AI technology advances, it promises to revolutionize industries but also poses risks that need managing. The drive for innovation is fueled by global competition, leading to a “move fast and break things” mentality, prioritizing rapid development over safety (Source A). Ensuring AI safety requires rigorous testing, ethical guidelines, and regulatory oversight to prevent harm, such as biased decision-making and privacy violations (Source B). Collaborative efforts between industry leaders, academics, and policymakers, like those by the Partnership on AI, aim to create responsible AI development frameworks (Source C). An adaptive regulatory framework is also essential to keep pace with advancements, setting standards for current and future AI applications (Source D). Ultimately, balancing innovation and safety involves fostering responsibility among developers, instituting robust safety protocols, and aligning stakeholders in their commitment to ethical AI practices (Source E).

Addressing Public Concerns and Misinformation

In the fast-evolving field of AI, addressing public concerns and misinformation is vital for fostering trust and ensuring safe integration into society. Public apprehension often arises from misunderstandings and misinformation, leading to fears and resistance to beneficial technologies. Key concerns include AI exacerbating societal inequalities by displacing jobs and increasing economic disparities. To counter this, stakeholders should engage in transparent dialogues, explaining how AI can create new job opportunities and improve productivity. Educational initiatives are crucial to equip the workforce with skills for an AI-driven economy, reducing unemployment fears.

Privacy and data security are also significant concerns, as AI relies on vast personal data. Implementing robust data protection regulations and transparent privacy policies is essential. Companies and governments should ensure AI systems are designed with privacy by default, using techniques like data anonymization.
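As a rough illustration of what a privacy-by-default step might look like, the sketch below pseudonymizes direct identifiers with a keyed hash before a record reaches an AI pipeline. The field names, the key, and the helper functions are hypothetical and are not drawn from any specific initiative. Note also that pseudonymization is weaker than full anonymization, since remaining fields can still allow re-identification.

```python
import hashlib
import hmac

# Hypothetical secret key for illustration; a real key would be stored securely.
SECRET_KEY = b"example-key-not-for-production"


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Replace an identifier with a keyed SHA-256 hash (HMAC)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(record: dict, id_fields: tuple = ("name", "email")) -> dict:
    """Return a copy of the record with direct identifiers pseudonymized."""
    return {
        field: pseudonymize(value) if field in id_fields else value
        for field, value in record.items()
    }


# Assumed example record; only the listed identifier fields are transformed.
record = {"name": "Ada Example", "email": "ada@example.com", "age_band": "30-39"}
scrubbed = scrub_record(record)
```

Because the hash is keyed and deterministic, the same person maps to the same token across records, so datasets can still be linked without exposing the raw identifier.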

Misinformation about AI’s capabilities, often fueled by sensationalist media, can lead to unrealistic expectations or panic. Public awareness campaigns and educational programs can demystify AI, providing balanced perspectives on its benefits and ethical considerations. Collaborative discussions among experts, policymakers, and the public are crucial for meaningful AI governance, ensuring policies reflect societal values. Ultimately, a multi-faceted approach of transparency, education, and collaboration can build trust and pave the way for responsible AI development.

How the $500M Will Be Allocated

The $500 million allocated for AI safety will be strategically distributed across several key areas to maximize impact. A significant portion will fund research and development, supporting academic institutions, independent organizations, and private sector initiatives to advance understanding of AI systems’ behavior and mitigate risks. Another critical allocation is towards policy formation and regulatory frameworks, collaborating with governments and industry leaders to establish guidelines that prioritize safety and ethics. Public awareness and education are also prioritized, with resources directed towards campaigns and educational programs to inform the public about AI risks and benefits. International collaboration is emphasized, fostering partnerships with global entities to address AI safety challenges that cross borders. Additionally, a contingency fund will address unforeseen challenges and invest in emerging technologies, allowing for adaptability in the rapidly evolving AI landscape. This comprehensive approach ensures AI technologies are developed and deployed safely and beneficially for humanity.

Research and Development Initiatives

Research and development (R&D) in AI safety is crucial to ensure AI systems are safe and aligned with human values. This effort is led by academic institutions, private enterprises, and government agencies. Universities like Stanford, MIT, and UC Berkeley have AI safety research centers focusing on robustness, interpretability, and fairness in AI models. In the private sector, companies such as Google, Microsoft, and OpenAI invest in AI safety. OpenAI works on aligning AI with human intentions, while Microsoft emphasizes transparency and accountability through its AI for Good initiative. Collaborative efforts like the Partnership on AI bring together academia, industry, and civil society to address ethical and societal AI implications. Governments also play a role; the EU’s Horizon Europe and the U.S. National Science Foundation fund AI safety research. Emerging technologies like formal verification and sandboxing are used to test AI systems in controlled environments, ensuring safety before real-world deployment.

Collaboration with Academic Institutions

Collaboration between industry leaders and academic institutions is essential for developing safe and ethical artificial intelligence (AI) technologies. Academic institutions lead AI research, offering new methodologies and frameworks crucial for safe AI system development. By partnering with these institutions, companies access cutting-edge research and skilled graduates, fostering innovation and ethical foundations in AI. Joint research labs and funded projects, focused on AI safety and ethics, exemplify successful collaborations. Additionally, universities conduct independent assessments of AI technologies to identify risks and biases before deployment. They also develop educational programs emphasizing AI’s ethical implications, preparing future researchers to prioritize safety and ethics. Despite challenges in aligning academic openness with commercial interests, successful partnerships are possible through transparent communication and shared goals. This collaboration ensures the development of innovative, safe, and beneficial AI systems, ultimately serving humanity’s best interests.

Public Awareness and Education Campaigns

Public awareness and education campaigns are essential for bridging the knowledge gap between AI experts and the general public, ensuring AI safety as these systems integrate into daily life. These campaigns aim to demystify AI by simplifying complex concepts into relatable examples, highlighting both benefits and ethical dilemmas (Source 1). This fosters informed public discourse, enabling individuals to participate in decisions affecting their lives and society. Education initiatives target diverse demographics, with tailored programs for various age groups, from school children to older adults, ensuring inclusivity (Source 2). Integrating AI literacy into school curricula prepares younger generations, while adult workshops update knowledge for an evolving job market. Public awareness campaigns also enhance transparency and trust in AI systems by providing clear information on development and implementation (Source 3). Collaboration between governments, educational institutions, and industry stakeholders amplifies the reach and impact of these campaigns, driving policy discussions and regulatory frameworks prioritizing AI safety and ethics (Source 4).

Challenges in Ensuring AI Safety

Ensuring AI safety involves tackling complex technical, ethical, and practical challenges. Technically, AI systems can behave unpredictably, especially as they evolve, leading to outcomes that may be hard to control (Source 1). Ethically, aligning AI objectives with human values is difficult, as AI often learns from biased datasets, potentially perpetuating societal biases (Source 2). Practically, scaling safety measures for diverse applications, from healthcare to autonomous vehicles, requires significant resources and coordination among researchers, policymakers, and industry leaders (Source 3). The rapid pace of AI development further complicates safety efforts, as regulatory frameworks often lag behind, risking insufficient oversight (Source 4). Additionally, establishing accountability for AI decisions, especially those made autonomously, poses legal and ethical challenges (Source 5). Overall, ensuring AI safety demands addressing unpredictability, ethical alignment, scalability, regulatory adaptation, and accountability through collaborative efforts among all stakeholders.

Technological Limitations and Uncertainties

Despite a $500 million investment to ensure AI safety, significant technological limitations and uncertainties persist. A key issue is the unpredictability of AI systems, especially complex deep learning models, which are difficult to interpret and control. This opacity raises concerns about effectively predicting and managing AI behavior. Additionally, AI development often outpaces regulatory frameworks, leading to deployment in critical sectors like healthcare and finance without adequate oversight, risking unintended consequences. The absence of standardized testing for AI systems makes it harder to ensure reliable performance in diverse conditions. AI systems’ adaptability and generalization remain limited, posing risks when they encounter scenarios outside their training data. Moreover, AI’s reliance on vast datasets can introduce biases that lead to unfair outcomes, requiring ongoing vigilance. The rapid pace of AI advancements adds uncertainty about future capabilities, emphasizing the need for adaptive governance to prioritize human safety.

Global Coordination and Standardization

Global coordination and standardization are crucial for ensuring AI safety and ethics as AI technologies transcend national borders. Establishing international norms is essential, with organizations like ISO and IEEE developing guidelines for safe AI system deployment, addressing data privacy, security, and fairness. Global coordination helps manage AI governance challenges due to varying technological advancements and regulations across countries. International bodies like the UN and World Economic Forum advocate for multilateral dialogues to harmonize regulations, preventing companies from exploiting lenient regulations in some regions. Sharing best practices through collaborative platforms like the Partnership on AI accelerates safer AI development and ensures innovations are informed by potential risks. Addressing geopolitical aspects of AI development requires international cooperation to prevent an AI arms race, maintaining global security. In conclusion, global coordination and standardization foster international collaboration, unified standards, and transparency, ensuring AI technologies align with human values and societal well-being.

The Future of AI Safety and Control

The future of AI safety and control is critical as AI advances rapidly. Ensuring AI safety involves addressing unpredictability in complex systems, requiring robust safety measures and fail-safes to prevent unintended consequences. Regulatory frameworks are essential, with governments recognizing the need for standards in transparency, accountability, and ethical AI use, especially in high-risk areas like healthcare and autonomous vehicles. Ethical considerations are crucial, with a focus on embedding human values such as fairness, transparency, and privacy into AI systems to gain public trust. Technological solutions, like explainable AI, aim to make AI decision-making more transparent, allowing for the identification and correction of harmful behaviors. Collaboration among AI researchers, ethicists, policymakers, and industry leaders is vital to developing comprehensive strategies for AI safety. This balanced approach, integrating technological, regulatory, ethical, and collaborative efforts, is necessary to ensure AI systems are safe, reliable, and beneficial for humanity.

Predictions for the Next Decade

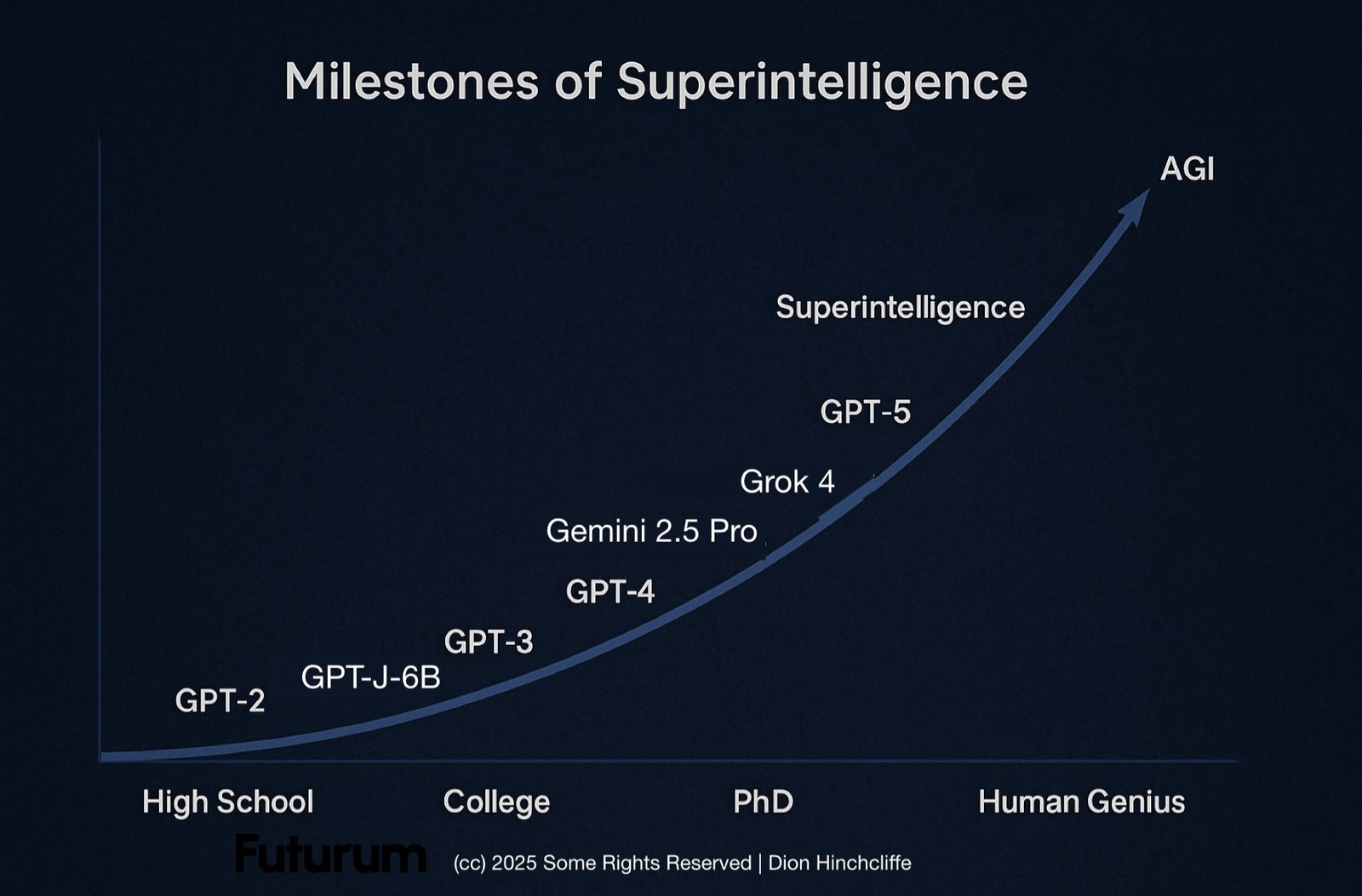

Over the next decade, AI is expected to evolve with a focus on safety and alignment with human values. One prediction is that the $500 million investment in AI safety research will advance interpretability and explainability, fostering transparency and trust (Source A). Regulatory frameworks are expected to tighten, with new regulations enforcing safety standards to prevent harmful AI deployment (Source B). Enhanced collaboration between tech companies, governments, and academia will address AI safety challenges, promoting knowledge sharing and innovation (Source C). Technological progress will likely include AI systems better aligned with human values, using techniques such as reinforcement learning from human feedback (Source D). New AI architecture paradigms will prioritize safety by design, integrating safety at the foundational level rather than as an afterthought (Source E). Overall, the next decade is likely to see transformative changes in AI, driven by funding, regulations, collaboration, and innovative design, with the aim of making AI technologies safe and controllable.

The Role of Emerging Technologies

Emerging technologies are vital in ensuring AI safety as AI systems integrate into society. Machine learning (ML), especially deep learning and reinforcement learning, enhances AI decision-making but introduces risks like bias and opacity. Explainable AI (XAI) is being developed to make AI processes more transparent. Blockchain offers a decentralized, tamper-evident data record that can strengthen AI data integrity and security, which is crucial for sectors like healthcare and finance. The Internet of Things (IoT) generates data for AI but poses security risks, which can be addressed with strong encryption and AI-driven threat detection. Quantum computing may eventually offer AI substantial additional computational power, which would necessitate new algorithms and safety protocols to prevent misuse. In summary, leveraging advancements in ML, blockchain, IoT, and quantum computing is essential for addressing AI safety challenges and helping AI align with human values and ethical standards.
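The tamper resistance attributed to blockchain above comes largely from hash-linking: each entry stores a hash of the previous one, so altering an earlier record breaks every later link. The following is a minimal single-machine sketch of that one idea, with hypothetical function names and example payloads. It deliberately omits the decentralization and consensus mechanisms that real blockchains add.

```python
import hashlib
import json

GENESIS = "0" * 64  # Assumed placeholder hash for the first entry


def entry_hash(payload: dict, prev_hash: str) -> str:
    """Hash the payload together with the previous entry's hash."""
    body = json.dumps({"payload": payload, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append(chain: list, payload: dict) -> None:
    """Append a new entry linked to the current tail of the chain."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append(
        {"payload": payload, "prev": prev_hash, "hash": entry_hash(payload, prev_hash)}
    )


def verify(chain: list) -> bool:
    """Recompute every link; any edited entry makes verification fail."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(entry["payload"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical log of training-data versions.
log = []
append(log, {"dataset": "scans-v1", "rows": 1000})
append(log, {"dataset": "scans-v2", "rows": 1200})
```

Calling `verify(log)` succeeds on the untouched log and fails once any stored payload is changed, which is why such records are better described as tamper-evident than as impossible to alter.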

Conclusion: Striking a Balance Between Advancement and Safety

The rapid advancement of artificial intelligence offers vast opportunities for innovation and economic growth but also poses significant safety and ethical risks. Balancing these aspects requires stringent regulatory frameworks, robust ethical guidelines, and continuous oversight. Governments and international bodies must collaborate to establish regulations that promote innovation while enforcing safety standards, adapting to AI’s evolving nature to prevent misuse (Source A). AI developers and companies should prioritize ethics from the start, ensuring transparent data practices, algorithmic fairness, and easily auditable systems to mitigate risks (Source B). Public and private investments, like the $500 million allocated for AI safety, are crucial for advancing research that considers societal impacts. This funding should support interdisciplinary research involving technologists, ethicists, and policymakers to develop comprehensive solutions (Source C). Achieving a balance between AI advancement and safety requires collective efforts, open dialogue, and collaboration among technologists, regulators, and the public to align innovation with safety and ethics (Source D).

Summary

In an era where artificial intelligence (AI) is increasingly pervasive, the question of safety and control has taken center stage, prompting a substantial $500 million investment aimed at ensuring AI’s safe integration into human society. The article delves into the motivations behind this financial commitment, exploring the potential risks AI poses if left unchecked and the crucial role of transparency and ethics in AI development. It examines the stakeholders involved, from tech giants to governmental bodies, and scrutinizes the power dynamics at play, questioning who truly holds the reins in steering AI’s trajectory. The narrative underscores the importance of collaborative efforts to foster AI systems that align with human values and priorities, ultimately emphasizing the need for vigilance and responsibility in shaping a future where AI acts as an ally rather than a threat.